AI Governance

Adopt GenAI without losing control of your sensitive data

Generative AI reuses sensitive data beyond the limits of legacy permissions, dramatically expanding the enterprise risk surface and exposing organizations to uncontrolled access and data loss.

Uncontrolled AI access to sensitive data

GenAI tools can access and reuse regulated and confidential data across cloud and on-prem environments, often without clear visibility or policy guardrails.

Identity sprawl magnifies AI risk

Over-permissioned users, guest accounts, service accounts, and stale identities dramatically expand the blast radius when AI tools surface data.

Legacy permissions weren’t built for GenAI

Hidden access paths, misconfigurations, and excessive privileges allow AI to expose far more data than originally intended.

No visibility into AI-driven activity

Without real-time monitoring of prompts, data usage, and anomalous behavior, sensitive information can be surfaced, shared, or exfiltrated before security teams react.

Use cases

Secure AI by reducing sensitive data exposure

Discover sensitive data before AI does

Continuously scan file servers and cloud repositories to identify regulated, confidential, and business-critical data before copilots or LLMs can access and reuse it.

Classify data with AI-aware context

Apply high-fidelity classification to detect semantic sensitivity, legal strategy, intellectual property, and regulated content so AI systems respect business and compliance boundaries.

Reduce overexposed data access

Identify excessive, inherited, and stale permissions across repositories, then remediate risky access paths to shrink the blast radius before AI amplifies exposure.

Enforce least privilege for AI data

Align access with business need using automated entitlement reviews, just-in-time privilege, and identity context to protect sensitive datasets from broad or unintended AI access.

Prevent sensitive data in LLM prompts

Detect and block regulated or confidential content pasted into public AI tools, reducing accidental leakage, shadow AI misuse, and AI-driven data exfiltration.

Monitor and respond to AI-driven risk

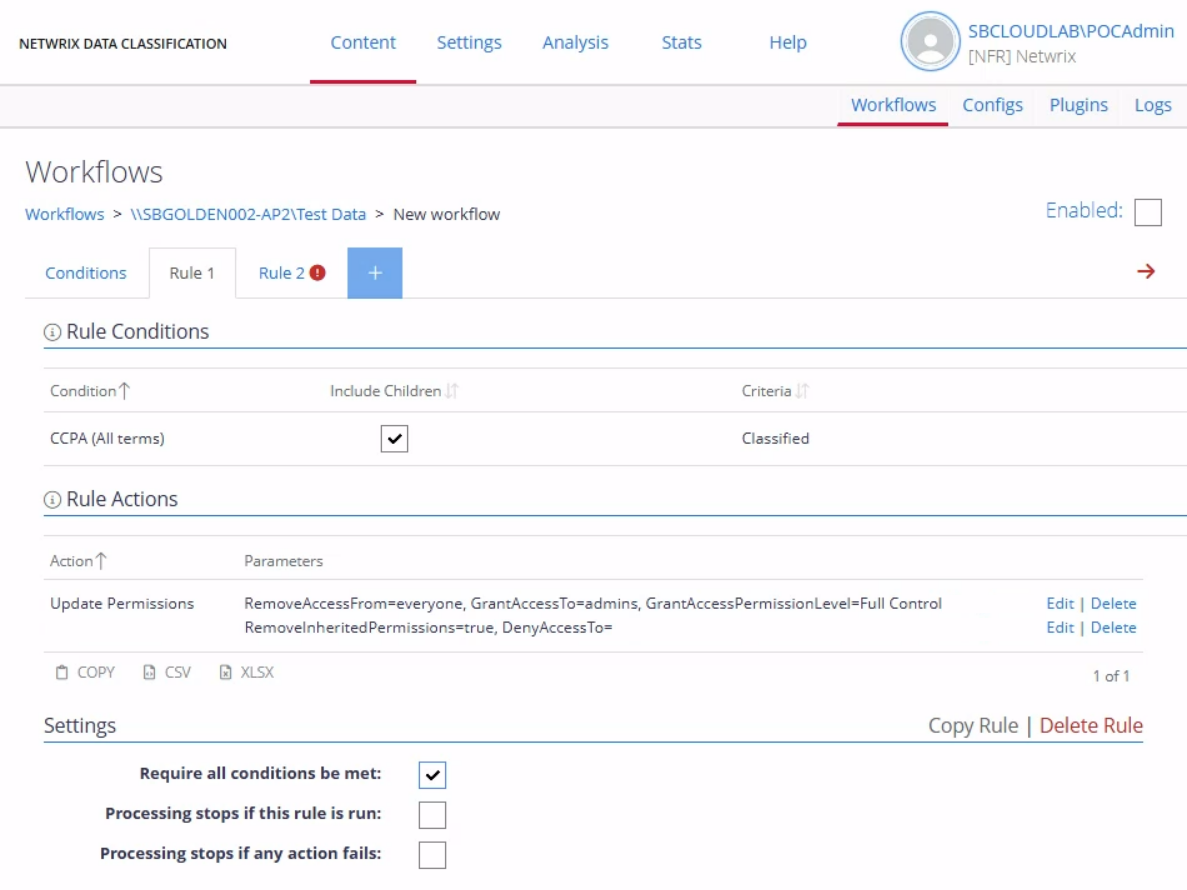

Correlate data sensitivity, identity activity, and abnormal access patterns to detect risky AI behaviors early and trigger automated containment and remediation workflows.

The Netwrix approach

Control sensitive data before AI amplifies risk

Why Netwrix for AI governance?

Generative AI is transforming enterprise IT, but it also reuses sensitive data in ways legacy permissions were never designed to control. The result is a dramatically expanded risk surface for CISOs. Netwrix secures GenAI adoption by exposing sensitive and shadow data, revealing risky access, and reducing data exposure before AI amplifies it.

Capabilities that deliver AI governance