12 Critical Shadow AI Security Risks Your Organization Needs to Monitor in 2026

Feb 13, 2026

Shadow AI introduces security and compliance risk when employees use unapproved AI tools that move sensitive data outside organizational control. Because AI adoption outpaces governance, organizations face exposure across data leakage, audit gaps, agentic behavior, and model-level attacks. Effective shadow AI governance requires visibility into data and identity, risk-based classification, and controls that enable secure AI use without slowing teams.

What data are your employees feeding into unapproved AI tools? If you can't answer that question, then you might have shadow AI security risks that you don't know about.

The Netwrix Cybersecurity Trends Report 2025 found that 37% of organizations have already had to adjust their security strategies due to AI-driven threats, while 30% haven't started AI implementation at all. That gap between how fast AI threats are evolving and how slowly organizations are responding is where shadow AI thrives.

AI at work isn't the problem. Using it outside IT-approved channels is. When employees adopt AI tools without oversight, the risks compound fast: data leaks through unvetted models, compliance gaps that surface during audits, and exposure pathways security teams can't monitor.

The faster you can name those specific risks, the faster you can build a response that balances enablement with protection. In this article, we'll walk you through what shadow AI security risks are, the 12 risks you need to monitor, and how to assess and prioritize them.

What are shadow AI security risks?

Shadow AI is a subset of shadow IT that refers specifically to the use of AI tools or platforms without IT department approval or oversight. A shadow AI security risk happens whenever company data moves through AI tools and channels that IT cannot see or control.

Shadow AI generally happens because employees want to take advantage of existing systems to speed up their work. But when a sales rep pastes a customer list with names and emails into ChatGPT to draft personalized outreach messages, that data now lives on servers outside your security perimeter. This introduces risks that security teams can’t monitor or mitigate.

The repercussions of shadow AI can be steep. Organizations with high shadow AI usage experience breach costs averaging $4.63 million, which is $670,000 more per breach than those with low or no usage.

Here's what makes shadow AI difficult to manage:

- It hides in plain sight: Shadow AI is often embedded in browser extensions, plugins, or AI-enabled features within already-approved SaaS tools, making it invisible to traditional IT discovery methods.

- It processes data through prompts: Unlike traditional software, shadow AI tools receive sensitive data through natural language inputs that get processed and potentially stored on third-party servers.

- Traditional frameworks don't cover it: While cybersecurity frameworks like NIST CSF, ISO 27001, and CIS Controls remain essential foundations, they weren't designed with AI-specific data flows in mind. This means the detection methods, exposure pathways, and governance requirements are fundamentally different from traditional shadow IT.

- Autonomous agents add complexity: Shadow AI can include agents that make decisions and take actions beyond simple data access, creating unpredictable security exposure.

These characteristics are why shadow AI can't be managed with the same playbook as traditional shadow IT.

The 12 critical shadow AI security risks

Shadow AI security risks can exist independently, but in practice, they compound. An employee using a personal AI account to process regulated data through a tool with no audit logging, for example, creates a compliance exposure that's greater than any single risk alone.

1. Unauthorized data exposure to third-party AI models

Every prompt sent to a third-party AI model is data leaving your environment. Unless the tool has been vetted and approved, you have no control over how that data is stored, used for model training, or retained. That's the core risk: sensitive information flowing to servers outside your security perimeter with no guardrails in place.

Think about what happens when your employees paste customer data into ChatGPT to draft a response faster. That prompt might contain proprietary code, financial projections, M&A documents, or customer PII, all of which get processed and potentially stored on third-party servers outside your control.
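One way to make this risk concrete is to screen outbound prompts for sensitive-looking values before they leave the environment. The following is a minimal sketch, not a production data loss prevention control: the two patterns and the function names (`find_sensitive`, `luhn_valid`) are assumptions chosen for illustration, and real coverage would come from a proper data classification engine.

```python
import re

# Illustrative patterns only (assumptions, not a complete PII catalog).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return len(digits) >= 13 and checksum % 10 == 0


def find_sensitive(prompt: str) -> dict[str, list[str]]:
    """Return sensitive-looking values found in a prompt, grouped by category."""
    findings = {
        "email": EMAIL_RE.findall(prompt),
        "card_number": [
            m.group().strip(" -")
            for m in CARD_RE.finditer(prompt)
            if luhn_valid(m.group())  # drop digit runs that are not card-like
        ],
    }
    # Keep only the categories that actually matched something.
    return {name: values for name, values in findings.items() if values}
```

A check like this could run in a browser plugin or proxy that sits in front of approved AI tools. It only helps for traffic you can see, which is why personal accounts and unsanctioned tools remain the harder problem.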

2. Personal account usage bypassing enterprise controls

This risk is surprisingly common, and employees often don't realize the difference between their personal and work AI accounts. When employees use their personal ChatGPT or Claude accounts for work tasks, you lose visibility, audit trails, and any ability to enforce data handling policies.

3. Governance framework gaps

AI tool adoption consistently outpaces the governance frameworks meant to manage it. When employees can sign up for a new AI tool in minutes, but your approval process takes weeks, the gap fills itself with shadow AI.

As a result, your security team can't enforce policies on tools they don't know exist, and your legal team can't review data handling terms for services nobody reported adopting.

4. Unsanctioned agentic AI and tool integrations

An emerging category of shadow AI risk encompasses unsanctioned AI models, tools, and autonomous agents integrated into business workflows. This includes plugins, Model Context Protocol (MCP) servers, and LangChain tools that can access production data beyond your security visibility.

Unlike traditional shadow IT, these agents make autonomous decisions, chain actions across systems, and can escalate their own privileges depending on how they're configured.

5. Prompt injection attacks

Prompt injection exploits a fundamental design flaw in large language models: user input and system instructions are processed as the same type of data. An attacker who crafts the right input can extract sensitive information, manipulate AI outputs, or trigger unauthorized actions, all through a conversational interface that wasn't designed with adversarial use in mind.

6. System prompt leakage exposing credentials

When AI tools are configured with API keys, database credentials, or other secrets embedded in their system prompts, prompt engineering attacks can extract them. A conversational interface becomes an attack vector when the right input causes the model to reveal information that was supposed to remain hidden.
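A simple preventive check is to scan system prompts for embedded secrets before an AI tool is deployed. The sketch below assumes three illustrative patterns. The `AKIA` prefix follows the well-known AWS access key ID convention, while the connection-string and assignment patterns are loose heuristics, and the names `SECRET_PATTERNS` and `scan_system_prompt` are hypothetical.

```python
import re

# Illustrative heuristics (assumptions); a dedicated secret scanner covers far more.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # scheme://user:password@host style connection strings
    "connection_string": re.compile(r"\b[a-z][a-z0-9+]*://[^\s:/@]+:[^\s@/]+@[^\s/]+"),
    # "api_key = ...", "password: ..." and similar assignments
    "assigned_secret": re.compile(
        r"(?i)\b(?:api[_-]?key|password|secret|token)\b\s*[:=]\s*\S{8,}"
    ),
}


def scan_system_prompt(prompt: str) -> list[str]:
    """Return the sorted names of secret patterns that match the system prompt."""
    return sorted(
        name for name, pattern in SECRET_PATTERNS.items() if pattern.search(prompt)
    )
```

Any hit is a signal to move the credential out of the prompt and into a secrets manager that the model never sees.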

7. AI supply chain poisoning

The AI supply chain introduces novel risk pathways through malicious dependencies in pre-trained models, datasets, and ML frameworks. These target AI developers and LLM integrations specifically, and they're difficult to detect with standard software composition analysis.

Because shadow AI tools bypass your vetting process entirely, your team has no opportunity to evaluate the provenance of models or training data before employees start feeding them sensitive inputs.
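For models that do go through vetting, one basic provenance control is to pin the hash of each approved artifact and refuse anything that doesn't match. This is a minimal sketch under the assumption that your team keeps a mapping of file names to approved SHA-256 digests; `approved_digests` and both function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model weights don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_approved_artifact(path: Path, approved_digests: dict[str, str]) -> bool:
    """Return True only if the file's digest matches the one pinned at review time."""
    expected = approved_digests.get(path.name)
    return expected is not None and expected == sha256_of(path)
```

Hash pinning only confirms that the artifact in use is the one that was reviewed. It doesn't detect a model that was already poisoned before review, so it complements provenance checks rather than replacing them.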

8. High-risk applications with inadequate security controls

Shadow AI applications adopted without IT vetting often lack fundamental security controls, including encryption, multi-factor authentication, audit logging, and data residency guarantees. Without these controls, sensitive data processed through these tools has no protection at rest or in transit.

9. Compliance evidence gaps for AI-specific controls

Your existing compliance program likely generates evidence for traditional access controls, change management, and data handling. But when auditors ask how you govern AI tool usage, what data employees send through AI prompts, or how you monitor AI agent behavior, most organizations have nothing to show.

This gap only surfaces when someone asks for it, and by then, the audit finding is already written.

10. Inadequate logging and visibility

Without a logging infrastructure for AI interactions, you can't detect anomalous behavior or conduct effective incident investigations. When AI tools touch in-scope data, inadequate audit logging can also put you out of compliance with PCI DSS Requirement 10, HIPAA's audit controls requirement (45 CFR §164.312(b)), and SOC 2 CC7.2.
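As a rough illustration of what an AI interaction log entry might contain, the sketch below builds a structured record that stores a hash and length of the prompt instead of the prompt text, so the log itself doesn't become another copy of the sensitive data. The field names and the `build_ai_audit_record` function are assumptions, not a standard schema.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_ai_audit_record(
    user_id: str, tool: str, prompt: str, data_labels: set[str]
) -> dict:
    """Build one audit record for an AI interaction (hypothetical schema)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "tool": tool,
        # Hash instead of raw text: supports correlation without storing content.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "data_labels": sorted(data_labels),
    }


def to_log_line(record: dict) -> str:
    """Serialize the record as one JSON line for shipping to a log pipeline."""
    return json.dumps(record, sort_keys=True)
```

Whether to retain full prompt text is a policy decision. Some investigations need it, and in that case the log store needs the same protection as the data it describes.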

11. AI agents, plugins, and browser extensions

AI agents, browser extensions, and plugins introduce risk at the integration layer. Each one operates with its own permissions, connects to external systems, and processes data in ways that are difficult to monitor at scale.

An extension that requests broad permissions can access session tokens, read page content, or exfiltrate data through background connections, and most organizations have no visibility into which extensions employees have installed.
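To illustrate what "broad permissions" can look like in practice, the sketch below inspects a Chrome-style extension manifest (already parsed into a dictionary) and returns the requested permissions that warrant review. The specific set of permissions treated as broad is an assumption for this example; your own review criteria may differ.

```python
# Permissions treated as "broad" here are an illustrative assumption.
BROAD_API_PERMISSIONS = {
    "cookies", "webRequest", "tabs", "scripting", "clipboardRead", "history",
}
BROAD_HOST_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def broad_permissions(manifest: dict) -> set[str]:
    """Return the broad permissions an extension manifest requests."""
    requested = {
        entry
        for key in ("permissions", "host_permissions")
        for entry in manifest.get(key, [])
        if isinstance(entry, str)  # ignore any non-string entries
    }
    return requested & (BROAD_API_PERMISSIONS | BROAD_HOST_PATTERNS)
```

An extension that returns a non-empty set isn't necessarily malicious, but it is a reasonable candidate for manual review before it lands on an allowlist.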

12. Intellectual property contamination and algorithmic bias

Shadow AI usage creates intellectual property risk when proprietary data ends up in commercial models outside organizational control. When employees paste source code, product roadmaps, or customer data into unapproved AI tools, that information may be incorporated into model training, making it potentially accessible to other users or competitors.

Separately, organizations face algorithmic bias liability if employees use unauthorized AI tools for employment decisions or customer-facing interactions. The organization may be held responsible for discriminatory outcomes regardless of whether leadership approved the tool, making unsanctioned AI a legal exposure that extends well beyond data security.

How to assess and prioritize shadow AI risks

If your organization doesn't have a formal IT risk assessment process yet, start there. But shadow AI introduces risks that traditional frameworks weren't built to catch, so you'll need an AI-specific layer.

The NIST AI Risk Management Framework provides that structure through four functions: Govern, Map, Measure, and Manage. Start by classifying discovered tools by data handling risk:

- Critical risk: Tools processing regulated data (PCI, PHI, PII). Require immediate action.

- High risk: Tools with access to proprietary business data. Require evaluation and controls.

- Medium risk: Tools processing internal but non-sensitive data. Require policy coverage.

- Low risk: Tools with no sensitive data access. Require monitoring only.
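The four tiers above can be expressed as a small classification rule. This is a minimal sketch: the category labels (`"PCI"`, `"PHI"`, `"PII"`, `"proprietary"`, `"internal"`) and the `classify_tool` function are assumptions about how discovered tools might be tagged in an inventory.

```python
from enum import Enum


class Risk(Enum):
    CRITICAL = "immediate action"
    HIGH = "evaluation and controls"
    MEDIUM = "policy coverage"
    LOW = "monitoring only"


REGULATED = {"PCI", "PHI", "PII"}


def classify_tool(data_categories: set[str]) -> Risk:
    """Assign the highest applicable tier based on the data a tool handles."""
    if data_categories & REGULATED:
        return Risk.CRITICAL
    if "proprietary" in data_categories:
        return Risk.HIGH
    if "internal" in data_categories:
        return Risk.MEDIUM
    return Risk.LOW
```

The checks run from most to least severe, so a tool that handles both internal notes and PHI lands in the critical tier.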

Once you've classified each tool by data handling risk, the next step is mapping those classifications against the specific compliance requirements your organization is subject to.

This is where shadow AI creates the sharpest audit exposure: a tool classified as "critical risk" that processes cardholder data, for example, triggers specific logging and access control mandates that you can't meet if you don't know the tool exists.

For regulated industries, the requirements most often in play are:

- PCI DSS Requirement 10 mandates logging of access to cardholder data environments

- HIPAA audit controls (45 CFR §164.312(b)) require tracking PHI access

- SOC 2 CC7.2 requires monitoring system components for anomalies

- GDPR Article 28 requires documented data processing agreements with any processor handling personal data
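This mapping can be kept as a simple lookup so that each discovered tool carries the list of requirements it triggers. The sketch below is an assumption about how that lookup might be structured: the data category keys and the `soc2_in_scope` flag are hypothetical, and the requirement names are taken from the list above.

```python
# Data category -> requirement triggered when a tool handles that category.
REQUIREMENT_BY_DATA = {
    "cardholder": "PCI DSS Requirement 10",
    "PHI": "HIPAA audit controls (45 CFR §164.312(b))",
    "personal": "GDPR Article 28",
}
SOC2_MONITORING = "SOC 2 CC7.2"


def applicable_requirements(
    data_categories: set[str], soc2_in_scope: bool = False
) -> list[str]:
    """List the requirements a tool triggers, given the data it handles."""
    requirements = [
        requirement
        for category, requirement in REQUIREMENT_BY_DATA.items()
        if category in data_categories
    ]
    # SOC 2 monitoring depends on audit scope rather than on a data category.
    if soc2_in_scope:
        requirements.append(SOC2_MONITORING)
    return requirements
```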

A visibility-first governance model provides the most practical sequence: detect all AI tools in use, classify them by data handling risk, restrict high-risk tools, and provide secure approved AI alternatives.
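The detection step often starts with data you already have, such as web proxy or DNS logs. The sketch below counts requests to known AI domains that aren't on the sanctioned list. It assumes the log has already been parsed into `(user, url)` pairs, and the domain set is a short illustrative sample rather than a complete inventory.

```python
from collections import Counter
from urllib.parse import urlsplit

# Short illustrative sample (assumption); a real list needs ongoing maintenance.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}


def discover_ai_usage(
    requests: list[tuple[str, str]], sanctioned: set[str]
) -> Counter:
    """Count requests per (user, host) to AI domains that are not sanctioned."""
    hits: Counter = Counter()
    for user, url in requests:
        host = (urlsplit(url).hostname or "").lower()
        if host in AI_DOMAINS and host not in sanctioned:
            hits[(user, host)] += 1
    return hits
```

This matches exact hostnames only. It won't catch AI features embedded inside approved SaaS tools or traffic from unmanaged devices, so treat the output as a starting inventory, not a complete one.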

Securing shadow AI agents, plugins, and integrations

According to McKinsey's deployment study, 80% of organizations have already encountered risky behaviors from AI agents, including improper data exposure and unauthorized system access.

The integration layer is where you have the most control. Here's where to start:

- Implement least-privilege architecture for AI agents: Restrict agent permissions to only the specific systems and data required for their defined tasks. A customer service agent should access only ticket data and knowledge bases, not financial systems or HR records. Review and document every permission grant, and implement time-limited access that expires automatically.

- Vet plugins and extensions before deployment: Before approving any AI plugin or browser extension, evaluate the vendor's security practices, data handling policies, and permission requirements. Look for extensions requesting broad permissions beyond what their stated function requires, unclear data retention policies, or vendors without documented security certifications.

- Enforce browser extension controls: Implement allowlisting for approved browser extensions through group policy or endpoint management. Monitor for unauthorized extension installations and create alerts when new AI-related extensions appear on managed devices.

- Secure Model Context Protocol servers and LangChain tools: These integration points can access production data beyond your security visibility. Implement logging for all agent actions, monitor for data access patterns that exceed expected behavior, and require security review before connecting any new integration to enterprise systems.

- Log agent actions comprehensively: Since many companies can't track AI agent data usage, implement detailed logging that captures what data each agent accesses, what actions it takes, and what external systems it connects to. This logging is essential for both security monitoring and compliance evidence.
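The least-privilege and logging controls above can be combined in a single authorization check: every agent request is evaluated against explicit, time-limited grants, and every decision is recorded. This is a minimal sketch with hypothetical names (`Grant`, `authorize`, and the in-memory `audit_log` list); a real deployment would back this with your identity platform and log pipeline.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Grant:
    """One documented permission: a single agent, a single resource, an expiry."""
    agent: str
    resource: str
    expires_at: datetime


def authorize(
    grants: list[Grant],
    agent: str,
    resource: str,
    now: datetime,
    audit_log: list[dict],
) -> bool:
    """Allow access only under a matching, unexpired grant, and log the decision."""
    allowed = any(
        g.agent == agent and g.resource == resource and now < g.expires_at
        for g in grants
    )
    # Denials are logged too: they show when an agent reaches beyond its task.
    audit_log.append(
        {
            "time": now.isoformat(),
            "agent": agent,
            "resource": resource,
            "allowed": allowed,
        }
    )
    return allowed
```

Because access is denied unless a grant exists, a customer service agent with a grant for ticket data is refused when it requests HR records, and its ticket access lapses automatically once the grant expires.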

Doing all of this manually across a hybrid environment is where most teams stall. The controls above are clear, but executing them requires visibility into both your data and your identities in a single view.

How Netwrix supports shadow AI security

Shadow AI governance breaks down when you can see your data but not who's accessing it, or when you can track identities but not what sensitive data they're touching. You need both views at once, and that's the problem Netwrix solves.

The Netwrix platform provides data security posture management (DSPM) through 1Secure and data discovery and classification through Access Analyzer. Together, they cover sensitive data across hybrid environments, including sources Microsoft-native tools don't reach, such as NetApp storage arrays and Amazon S3 buckets, via Access Analyzer's 40+ data collection modules.

For shadow AI specifically, 1Secure provides visibility into Microsoft Copilot exposure by reporting which sensitive data Copilot can access and surfacing risk assessments to support informed AI rollout decisions.

On the identity side, Netwrix’s identity threat detection and response (ITDR) capabilities surface unusual data access spikes, permission changes, or failed authentication patterns that could indicate shadow AI tool usage.

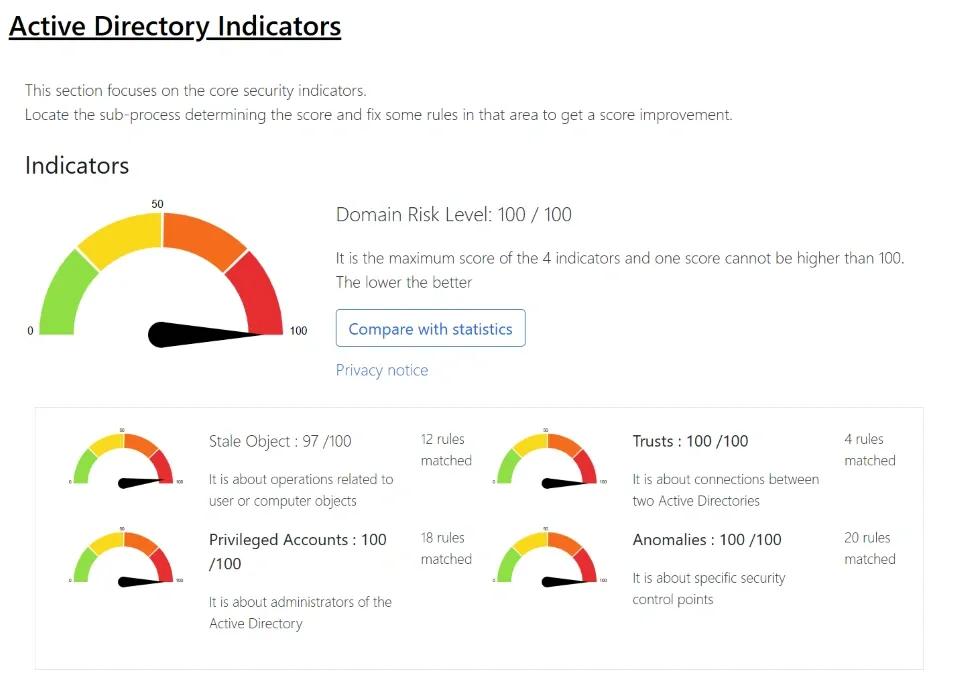

Risk assessment dashboards highlight the identity hygiene issues that make shadow AI more dangerous. Think dormant accounts, excessive privileges, and synchronization gaps between on-premises Active Directory and Entra ID.

Netwrix is built for fast time-to-value. 1Secure delivers high-impact outcomes from day one with no complex deployments, while Netwrix Auditor provides ready-to-use, human-readable reports so teams can start answering audit questions about AI-related data access in minutes rather than hours.

Compliance reporting maps directly to PCI DSS, HIPAA, SOC 2, GDPR, and CMMC frameworks, so the evidence your auditor asks for is a report pull, not a manual evidence-gathering exercise.

If your team needs visibility into shadow AI risk but can't wait for a six-month implementation project, you need a platform that starts delivering answers on day one. Request a Netwrix demo to get started.


About the author

Dirk Schrader

VP of Security Research

Dirk Schrader is a Resident CISO (EMEA) and VP of Security Research at Netwrix. A 25-year veteran in IT security with certifications as CISSP (ISC²) and CISM (ISACA), he works to advance cyber resilience as a modern approach to tackling cyber threats. Dirk has worked on cybersecurity projects around the globe, starting in technical and support roles at the beginning of his career and then moving into sales, marketing and product management positions at both large multinational corporations and small startups. He has published numerous articles about the need to address change and vulnerability management to achieve cyber resilience.
